The legislature in which I serve is now considering a distracted driving ban. I’m not going to go into that bill, but it does usher in my next topic. We are about to see a dramatic revolution in user interfaces driven by AI. It will make touchscreens obsolete and change the whole conversation about distracted driving, which will be a good thing.

It’s already a cliché to say that AI will change everything, so let’s focus on just this one part.

The AI revolution started in earnest a few months ago with the release of ChatGPT. Yes, there have been many milestones before that, but I really think ChatGPT will be seen as the turning point that brought AI into common awareness and wove it into the zeitgeist of tech. Ordinary people are already using ChatGPT every day to get things done.

While ChatGPT is amazing, and the corresponding efforts by Google et al. will be equally amazing, probably the most profound revolution will be in the way we interact with technology. I see it as the third big phase of human-computer interaction. We’ll get to that, but first, let’s look at the first two phases.

The first phase was stationary and tactile.

Computing tech was stationary primarily because it was huge. It took up tons of space. It needed tons of power and cooling. Some mainframes actually needed water cooling! Even as it shrank, it still needed a desktop.

When ‘luggables’ and laptops started to enter the picture, they were still just mobile implementations of a stationary experience. That still holds today.

Computing was also tactile. I’m not sure why this is, but I think it was just assumed to be good design. The keyboard made a satisfying “click” when you used it. The mouse was weighted well, and the buttons gave good feedback. Your fingers could provide information to your brain about what was happening.

And this is an important point. Tactile interfaces could provide feedback and context without you having to look at them. Keyboards had a ‘nub’ on certain keys so you could put your hands in position without taking your eyes off the screen or document. The clicks of the mouse and keyboard confirmed that an input had been received, with no visual check required.

Tactile interfaces leveraged one of our five major senses to interact with the technology. This is a big deal, and it’s an aspect well understood by the gaming community. The tactile interface of your mouse or keyboard can mean (virtual) life or death, and there’s a huge market for expensive, high-end versions.

Losing the tactile interface eliminated an entire sense from our interaction with technology. Through the distraction that followed, it has likely cost us hundreds of thousands of lives and drastically reduced our productivity, which leads us to the second phase.

The second phase (which we’re in) is mobile and visual.

In the second phase, technology got small enough to be portable.

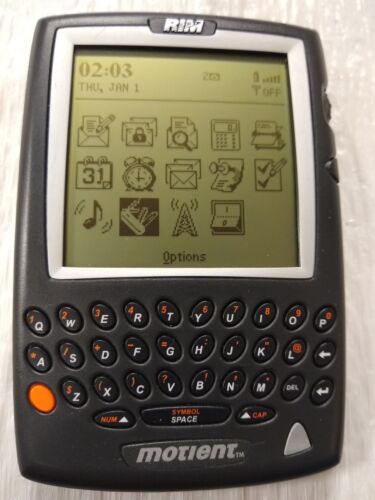

The early part of the second phase maintained its predecessors’ tactile design.

Because tactile interfaces didn’t require your eyeballs, they didn’t interfere with your interaction with the real world. Bike couriers would famously ride through large cities while texting on a phone in their pocket. Kids could text in class without being discovered. It was a unique blend of the first and second phases.

Then Apple perfected the touchscreen, and the second phase picked up momentum.

The touchscreen eliminated all of the tactile aspects of the prior world. You still touched the technology, but you had to look at it. And once those eyeballs descended to the touchscreen, they never left, and the level of distraction skyrocketed.

However, it’s important to realize that we are not distracted because we want to be. We’re distracted because we have to be. Once the touchscreen entered the picture (get it?), we were forever distracted by design. As our world has become app-dependent, distraction has become a requirement of daily life.

Unfortunately, this trend has continued to the point where we’re completely surrounded by touchscreens. There is at least some recognition that this is a bad thing, but it’s unlikely to change on its own. Touchscreens hold a near-hegemony over how we interact with technology.

It doesn’t matter, however. AI will prove to be a better way of interacting with technology, and it can replace touchscreens by simply being added to the mix. As a disruptive tech, it will easily crush the touchscreen in terms of interaction.

## The Coming Age of the AI-Driven Interface

I know, I know. Siri stinks. Siri is buggy, gets words wrong, is Apple-centric and is really limited in usefulness. But Siri and Alexa and such are mere shadows of what is to come.

Imagine saying “Hey [phone], can you plan a route to the beach, and try to find a way that avoids normal spring break traffic jams. Oh, and take us through some of the more scenic drives. Maybe a small historic church or small-town courthouse. Also, make a playlist for the trip that is good for driving with some of my family’s favorite songs…be sure to add the Beach Boys as we get closer to the coast.”

This would take an hour or two of pre-planning in the current interaction model. It would require many clicks, taps, and keystrokes. If you did it on a phone or tablet, it would probably take even longer.

More importantly, it would be impossible to do while driving. And you would be hyper-focused on the interaction wherever you did it. But AI interactivity will completely free up your time and focus. You will be able to ask this question of your car, your phone, or some device we haven’t contemplated yet. And you’ll be able to do it after you’ve already left, with your eyes on the road and your hands at 10 and 2.

The AI-driven interface will insert itself between you and the technology. It will eliminate the need to touch and look, and it will handle all the work of bouncing between apps behind the scenes.

There is much more to think about in all this. The best mental model is to imagine a college student who is always on hand, ready to operate your phone for you whenever needed. Think of how that would change your interaction with day-to-day technology. You’ll only look at the screen when needed, and you’ll only be distracted when you choose to be.