As we move headlong down the rabbit hole that is AI, we are seeing quite a bit of fear and hyperbole. AI, we’re told, will cause the extinction of humanity, eliminate jobs en masse, and enable all sorts of world-ending scenarios.

Of course, these predictions could be true. AI is indeed a world-changing, ‘disruptive’ technology. Personally, I haven’t seen a watershed with this much water-shedding potential in my 30-year tech career. But I think much of the negativity has a bit of a Chicken Little tone to it.

The cultural touchstones created in fiction and entertainment haven’t helped. Whether it’s the brutal, soulless violence of the Terminator or the quiet, plodding evil of HAL 9000, we have been conditioned to view AI with suspicion. These stories may serve as warnings, but they are fiction.

While AI’s potential may be unprecedented, there is a historical template for the fear it’s causing: the fear of encryption in the ’90s had much the same tone.

The arrival of high-grade encryption for the masses closely parallels what we’re seeing with AI. Fast, general-purpose processing power suddenly became available to everyone, and so did new applications that put it to use.

One of those applications was PGP, short for “Pretty Good Privacy,” written by Phil Zimmermann. It combined RSA (Rivest-Shamir-Adleman) asymmetric-key encryption with IDEA (International Data Encryption Algorithm) symmetric-key encryption to provide extremely strong encryption. Zimmermann released it for free, which meant that anyone could suddenly encrypt data with military-grade encryption.

(Zimmermann originally used his own home-brewed BassOmatic symmetric cipher but switched to IDEA after cryptographers pointed out significant holes in it.)
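For the curious, “combined” here means a hybrid scheme: the slow asymmetric cipher (RSA) encrypts only a short random session key, and the fast symmetric cipher encrypts the message itself with that key. Below is a toy Python sketch of that idea under loud assumptions. It uses textbook RSA with no padding and two small, well-known Mersenne primes, and it swaps IDEA for a SHA-256 keystream XOR because Python’s standard library has no IDEA implementation. The function names are hypothetical, not PGP’s, and none of this is secure.

```python
import hashlib
import secrets

# --- Toy asymmetric part: textbook RSA, NO padding (insecure, illustration only) ---
P = 2**61 - 1                       # Mersenne prime M61 (far too small for real use)
Q = 2**89 - 1                       # Mersenne prime M89
N = P * Q                           # ~150-bit modulus; real keys use 2048+ bits
E = 65537                           # common public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (modular inverse, Python 3.8+)

def rsa_encrypt(m: int) -> int:
    return pow(m, E, N)

def rsa_decrypt(c: int) -> int:
    return pow(c, D, N)

# --- Toy symmetric part: SHA-256 counter-mode keystream XOR (stand-in for IDEA) ---
def keystream_xor(key: bytes, data: bytes) -> bytes:
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        # Each block of keystream is SHA-256(key || counter).
        stream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    # XOR is its own inverse, so the same function encrypts and decrypts.
    return bytes(b ^ k for b, k in zip(data, stream))

def hybrid_encrypt(plaintext: bytes):
    session_key = secrets.token_bytes(16)  # fresh random 128-bit key per message
    wrapped_key = rsa_encrypt(int.from_bytes(session_key, "big"))  # RSA wraps only the key
    return wrapped_key, keystream_xor(session_key, plaintext)      # symmetric cipher does the bulk

def hybrid_decrypt(wrapped_key: int, ciphertext: bytes) -> bytes:
    session_key = rsa_decrypt(wrapped_key).to_bytes(16, "big")
    return keystream_xor(session_key, ciphertext)

if __name__ == "__main__":
    wrapped, ct = hybrid_encrypt(b"Pretty Good Privacy")
    print(hybrid_decrypt(wrapped, ct))  # prints b'Pretty Good Privacy'
```

The design point is the one PGP made practical: RSA only ever touches a 16-byte key, so the expensive public-key math stays small no matter how long the message is.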

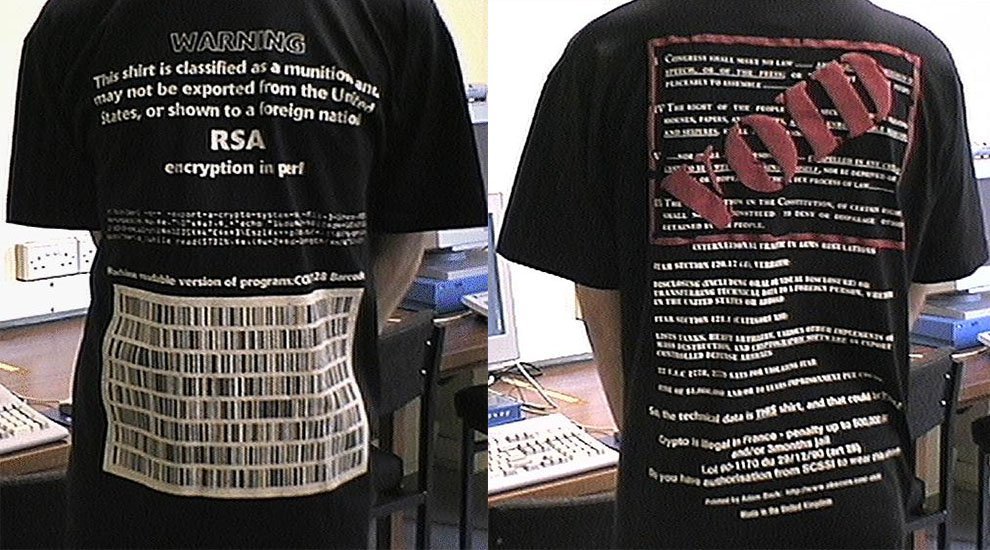

The result was a crazy mismatch between government policy and reality. Encryption was classified as a “munition” under federal law, and exporting it carried heavy penalties. So, technically, each instance of exporting PGP outside the US could have resulted in over a million dollars in fines and 10 years in prison.

Needless to say, this was a stark mismatch between commercial availability and legal consequence. Suddenly, the versatility and power of a home computer gave it the same legal classification as an automatic rifle, an F-16, or plutonium. Even the late Radio Shack had never sold such things. Now, in effect, it did.

The absurdity was boiled down to illustrative extremes. Emailing four lines of Perl code, or posting them on a website, could expose you to a million-dollar fine and 10 years in jail. Today everyone understands that a computer can be used for illegal activity, but at the time the idea of a quirky, nerdy home computer being a munition was ludicrous.

In the eyes of the law, the very browser you’re using right now would have been the equivalent of a Sidewinder missile.

While it was widely recognized that this situation was a little bit crazy (the Gubmint backed off a bit in the mid-’90s), the Gubmint’s rhetoric and hyperbole only escalated. There were many sky-is-falling scenarios about what the world would look like now that anyone could encrypt data.

In 1993 the government introduced the “Clipper Chip.” It wanted to put a back door in every encryption device so that it could access secure communications:

“Without the Clipper Chip, law enforcement will lose its current capability to conduct lawfully-authorized electronic surveillance.” – Georgetown professor Dorothy Denning

The FBI Director in 1997 famously said:

“Uncrackable encryption will allow drug lords, spies, terrorists and even violent gangs to communicate about their criminal intentions without fear of outside intrusion. They will be able to maintain electronically stored evidence of their criminal conduct far from the reach of any law enforcement agency in the world.” – FBI Director Louis Freeh

As recently as 2011 and 2014, law enforcement officials were still saying things like this:

“We are on a path where, if we do nothing, we will find ourselves in a future where the information that we need to prevent attacks could be held in places that are beyond our reach… Law enforcement at all levels has a public safety responsibility to prevent these things from happening.” – FBI General Counsel Valerie Caproni (2011)

And in the modern version of the PGP issue, when the government wanted unfettered access to your iPhone:

“There are going to be some very serious crimes that we’re just not going to be able to progress in the way that we’ve been able to over the last 20 years.” – Deputy Attorney General James Cole (2014)

It’s not hard to see the parallels between this rhetoric and what we’re seeing with AI. It’s in our nature to imagine extreme futures where the worst-case scenario reigns. But that kind of vision is also a tool for creating the policy someone wants. Sometimes they skip the rhetoric and just tell you what they want:

“I don’t want a back door… I want a front door. And I want the front door to have multiple locks. Big locks.” – NSA Director Michael Rogers, speaking at a cybersecurity conference (2014)

So it’s worth noting that, as with encryption, the extreme rhetoric around AI is probably not just about getting clicks and readers. It reflects a policy push, both overt and covert. With encryption, the public warnings were matched by very serious efforts behind the scenes to address the fear of a world with readily available, strong encryption.

Many of these efforts came to light in the Edward Snowden leaks. They included secret partnerships with private companies, extensive efforts to break encryption, and covert efforts to sabotage proprietary and open-source projects. That could be the subject of an entire post, but you can bet similar efforts are under way in response to the perceived threat of AI.

Did these extensive efforts help us? It’s impossible to know. Like the dog who barks at the mailman every day and believes he’s thwarting a mass murder, it could be an illusory correlation. Or the end of the world could have been prevented multiple times.

So as we move into the world of AI, we may be facing an unprecedented scale of impact. But the situation itself is very much precedented. It’s best to push past the scary rhetoric and get into the messy world of actual analysis and prediction.

We should also understand that massive, massive amounts of capital and human effort are at work behind the scenes in ways we may never know about.