Like any child of the ’80s who’s into tech, I’m fascinated by the idea of self-driving cars. The only thing cooler would be flying cars, but it seems we’ll have to keep crawling before we can fly.

Thanks to Google and Tesla, self-driving automobiles are now a real possibility. In fact, Tesla’s messaging and Musk’s relative record of success have made them more than a possibility: they are an expectation. It is now baked in that self-driving cars will revolutionize the world of transportation.

However, the reality is proving to be more difficult. Delays and complications abound. And predicting timelines has become foolhardy.

The obvious issue is that driving is very, very complicated and unpredictable, so much so that human minds routinely get confused. It makes sense that artificial minds will have the same issues, that this is a very difficult problem to solve, and that it will take a while to do so.

But there may be ways to speed up the process. And there may be tragic events that will suddenly slow down the process by many years or decades if we’re not smart about all this. Let’s start with the latter.

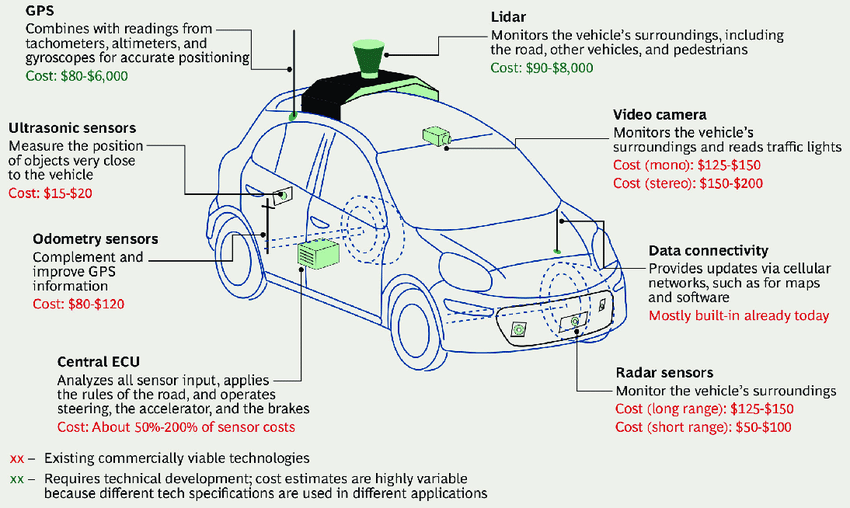

Lidar and Radar and Cameras, oh my! Feeding information to self-driving AI is very complicated. It should give us new appreciation for our own five senses. Source: Boston Consulting Group

Artificial Intelligence needs tons of data to learn. This means that AI engines will have to spend huge amounts of time collecting the data needed to learn how to drive on our roads. I think we’re learning that our roads are more complicated and unpredictable than we thought, which means the AI behind autonomous driving will need more and more data.

Tesla uses “shadow mode testing”, in which the AI engine pretends to drive the car and its decisions are compared against the actions of the real driver. The large number of Tesla drivers helps in this regard, since every car on the road can supply comparisons.
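To make the idea concrete, here is a minimal sketch of what a shadow-mode comparison could look like. It is not Tesla’s implementation; the `Frame` fields, the `disagreements` helper, and the 5-degree steering tolerance are all hypothetical, chosen only to show how an AI’s silent decisions might be checked against a human driver’s actual ones.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """One moment of a drive (hypothetical fields): what the human did vs. what the AI would have done."""
    human_steering_deg: float
    ai_steering_deg: float
    human_braking: bool
    ai_braking: bool


def disagreements(frames, steering_tolerance_deg=5.0):
    """Return indices of frames where the shadow AI's choice differs from the driver's.

    The AI never controls the car; its outputs are only logged and compared.
    """
    flagged = []
    for i, f in enumerate(frames):
        steer_gap = abs(f.human_steering_deg - f.ai_steering_deg)
        if steer_gap > steering_tolerance_deg or f.human_braking != f.ai_braking:
            flagged.append(i)
    return flagged


frames = [
    Frame(0.0, 1.0, False, False),    # small steering gap, same braking: agreement
    Frame(-10.0, 2.0, False, False),  # AI would have steered very differently
    Frame(0.0, 0.0, True, False),     # driver braked, AI would not have
]
print(disagreements(frames))  # [1, 2]
```

Each flagged frame is a “mistake” the system can learn from without anyone getting hurt, which is exactly why a large fleet of human drivers is so valuable.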

But this illustrates the problem. Artificial Intelligence and machine learning depend on mistakes. The systems make mistakes and learn from them, and they make an enormous number of them. The more complex the environment, the more data you need. And the more data you need, the more mistakes are required to generate that data.

Yet driving is dangerous. A mistake in driving can cost lives. So the question quickly becomes “what is our tolerance for mistakes by self-driving cars?” Are we willing to sacrifice lives so that cars can learn to drive themselves?

I think the answer is very likely to be “no”, and probably a more resounding “no” than we anticipate. There have already been some fatal accidents involving autonomous cars, and there have been odd attempts to cover up some close calls. But the day we have a high-profile event, such as the loss of a family of four, a school bus accident, or an elderly veteran run over, public (and legislative) opinion will shift quickly against the current tech.

An episode like that will be tragic for the individuals involved, but it will also set the autonomous vehicle effort back for decades. People are too important, and this tech has too much potential to let that happen. So what can we do?

When it comes to autonomous driving, all the attention is on the cars themselves. That makes sense given the ‘cool factor’ and the prominence of the companies making the cars. This is where the work is.

Hardly any attention is paid to the technology of roads themselves. Even less attention is paid to the technology of planning, design, and construction of the roads. It’s just accepted that the roads are what they are.

A huge part of advancing autonomous vehicles, I think, is to develop a set of standards and guidelines that will certify a road for autonomous cars. Autonomous driving should require this certification. It would include things such as:

- Universal, standard lane markers, including curb and hash marks in turns

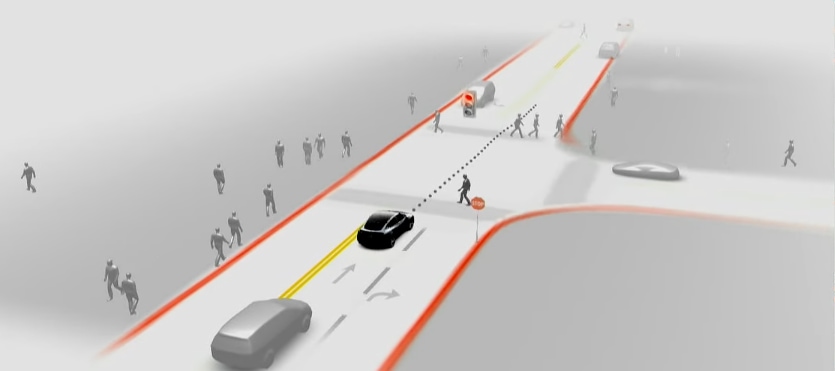

- Assisting sensors in blind corners and unprotected turns

- Redesign of crosswalks and bike lanes to protect pedestrians and bikers

- Standardization of other vulnerable areas such as loading areas for passengers

- Indicators of places where pedestrians and other vulnerable individuals are likely to be present: “high caution” areas that tell the AI to enter a heightened state of precision and sensitivity.

- Appending or tagging some of this information to the GPS standards
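As a rough illustration of how a certification requirement could gate autonomous driving, here is a minimal sketch. No such standard exists today; `REQUIRED_FEATURES`, `RoadSegment`, and `driving_mode` are hypothetical names loosely mapped to the list above, and a real standard would be far more detailed.

```python
from dataclasses import dataclass, field

# Hypothetical certification checklist, loosely based on the list above.
# These names are illustrative only; no real standard defines them.
REQUIRED_FEATURES = frozenset({
    "standard_lane_markers",
    "blind_corner_sensors",
    "protected_crosswalks_and_bike_lanes",
    "standard_loading_areas",
})


@dataclass
class RoadSegment:
    segment_id: str  # stand-in for a location tag attached to map/GPS data
    features: set = field(default_factory=set)
    # True where pedestrians and other vulnerable people are likely to be present.
    high_caution: bool = False


def missing_features(segment: RoadSegment) -> set:
    """Return the required features this segment still lacks."""
    return set(REQUIRED_FEATURES - segment.features)


def driving_mode(segment: RoadSegment) -> str:
    """Uncertified roads fall back to a human; high-caution zones raise sensitivity."""
    if missing_features(segment):
        return "manual"
    if segment.high_caution:
        return "autonomous_high_caution"
    return "autonomous"


school_zone = RoadSegment("A1", set(REQUIRED_FEATURES), high_caution=True)
rural_road = RoadSegment("B2", {"standard_lane_markers"})
print(driving_mode(school_zone))  # autonomous_high_caution
print(driving_mode(rural_road))   # manual
```

The design choice here mirrors the argument: certification is a hard gate (any missing feature means a human drives), while the “high caution” tag only changes how the AI behaves on roads that are already certified.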

Federal and state highways would be pretty easy to outfit, as they already follow standard guidelines. The obvious issue will be local and rural roads.

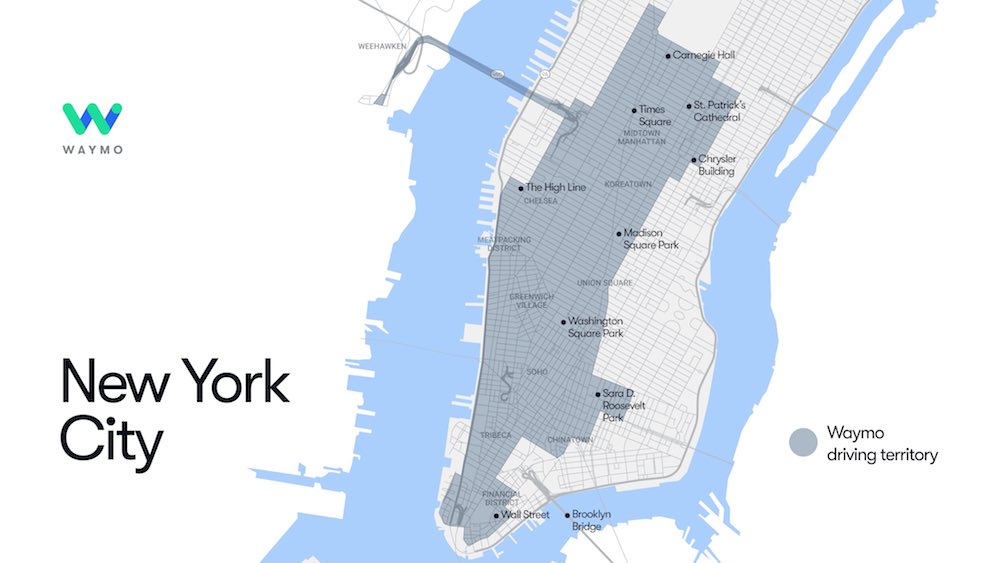

Google’s self-driving project addresses part of this situation by mapping every area’s detail ahead of time. This approach has a similar effect, in that it ‘certifies’ every road by documenting its features ahead of time. There are a couple of problems with this, however.

First, it is a daunting task. Even with the resources at Google’s disposal, it is nearly impossible to map every road. Indeed, Google Street View still misses huge chunks of coverage despite a significant effort to cover everything. And you shouldn’t underestimate the tendency in some places to consider mapping a privacy concern.

Second, streets change, and those changes could have significant implications. Using Street View as a reference, it’s not uncommon to find places that haven’t been revisited in many years, again despite a very comprehensive effort by Google.

Adding and adopting street standards and certification would help Google’s approach and speed up the process.

There are no guarantees in life. Walking out the door has its own level of risk. But when it comes to life-and-death safety, we should mitigate these risks as much as is practical. When it comes to AI, autonomous driving, and self-driving cars, I think it’s obvious that we need a set of standards and a certification requirement. Moving in this direction now will allow us to sidestep both delays in adoption and tragedy on the way to adoption.