So up to this point, we’ve highlighted the wonky technical stuff illustrating just how resilient the Internet is. And how intentional that resilience is. There is a tremendous amount of intelligence and money applied to make sure that communication amongst many entities can happen, no matter what.

Now we arrive at the final layer, the application layer, and we will feel right at home at this layer. This layer is where humans interact with the technology. It’s where bazillions of dollars are made. It’s where all the magic happens and what all the fuss is about. Without layer 7, none of the other 6 layers matter.

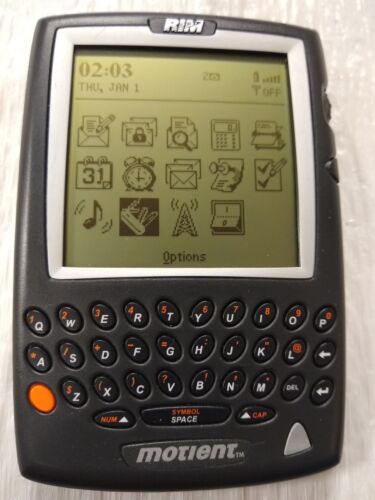

The first major applications were things like:

- Telnet (1969), which allowed users to remotely access a computer system as if they were sitting in front of it.

- Email (1971), created by Ray Tomlinson, who extended an existing single-computer messaging program so that messages could travel between machines on the network.

- FTP (1971), the File Transfer Protocol, which is somewhat self-explanatory.

- Usenet (1979), a bulletin-board-like system that allowed users to post, read, and reply to public messages.

- IRC (1988), Internet Relay Chat, created by Jarkko Oikarinen, which allowed users to join chat rooms and talk with each other in real time.

- Gopher (1991), by Mark P. McCahill, a spiritual precursor to the web that let users browse and retrieve documents through menus.

These early applications laid the groundwork for the rich ecosystem we have today. However, they were relatively static and specialized in their functions. Each served a specific purpose: Telnet for remote access, Email for messaging, FTP for file transfer, and so on. While groundbreaking for their time, these applications were limited in their flexibility and scope.

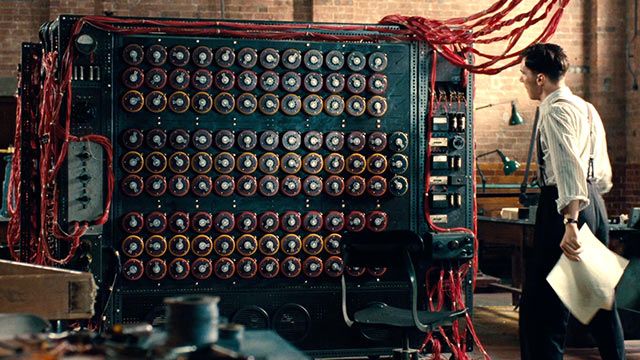

Then came the game-changer: HTTP (Hypertext Transfer Protocol) and the World Wide Web.

Proposed by Tim Berners-Lee in 1989 and publicly released in 1991, HTTP and the web became the most flexible addition to Layer 7 yet. Unlike its predecessors, the web wasn’t designed for a single, specific purpose. Instead, it provided a general-purpose platform that could be adapted for almost any type of application.

What made HTTP and the web so revolutionary was their simplicity and extensibility:

- Hypertext: The ability to link documents (which eventually became pages) together created a web of information, letting users jump from one document to another with a single click.

- Statelessness: Each request-response cycle is independent, which simplified server design and allowed for easy scaling.

- Content Types: HTTP could serve various types of content (text, images, audio, video), making it incredibly versatile.

- Client-Server Model: This separation of concerns allowed for rapid innovation on both ends.
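To make statelessness, content types, and the client-server split a little more concrete, here is a minimal sketch using only Python’s standard library (`http.server` and `http.client`). The two paths and their payloads are hypothetical examples chosen for illustration; the point is that each GET is handled on its own, and the same protocol carries both HTML and JSON, distinguished only by the `Content-Type` header.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical resources: the same protocol carries both an HTML page and
# JSON data; only the Content-Type header tells the client which is which.
RESOURCES = {
    "/": ("text/html; charset=utf-8", b"<h1>Hello, web</h1><a href='/data'>data</a>"),
    "/data": ("application/json", b'{"layer": 7}'),
}


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Stateless: the answer depends only on this request's path, never on
        # anything the same client asked for earlier.
        if self.path in RESOURCES:
            status = 200
            content_type, body = RESOURCES[self.path]
        else:
            status = 404
            content_type, body = "text/plain; charset=utf-8", b"not found"
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging for this demo


def start_server():
    """Server side: listen on localhost; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", 0), Handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, server.server_address[1]


def fetch(port, path):
    """Client side: one connection, one GET request, one response."""
    conn = http.client.HTTPConnection("127.0.0.1", port)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Content-Type"), resp.read()
    finally:
        conn.close()


if __name__ == "__main__":
    server, port = start_server()
    for path in ("/", "/data", "/missing"):
        print(path, fetch(port, path))
    server.shutdown()
    server.server_close()
```

Each call to `fetch` opens a fresh connection and gets back a complete answer; the server keeps no memory of earlier requests. When real sites need state (logins, shopping carts), they layer mechanisms such as cookies on top of HTTP rather than changing the core request-response cycle.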

The web’s flexibility meant that developers could create applications that were previously unimaginable. Suddenly, you could have:

- Online stores (Amazon, 1994)

- Search engines (Google, 1998)

- Social networks (Facebook, 2004)

- Video streaming platforms (YouTube, 2005)

- Microblogging services (Twitter, 2006)

All of these diverse applications run on the same underlying protocol and infrastructure. This flexibility allowed for rapid innovation and democratized app development. Anyone with a basic understanding of HTML and a web server could create content accessible to millions.

Moreover, as web technologies evolved (with the introduction of JavaScript, CSS, and more sophisticated server-side software), the web became even more powerful. Modern web applications can do almost anything a desktop application can do, from complex data processing to real-time communication.

Layer 7 is incredibly flexible. It’s the wild west of the OSI model, where applications can do pretty much anything they want. Want to create a social media platform? Layer 7. A video streaming service? Layer 7. A decentralized cryptocurrency network? You guessed it, Layer 7.

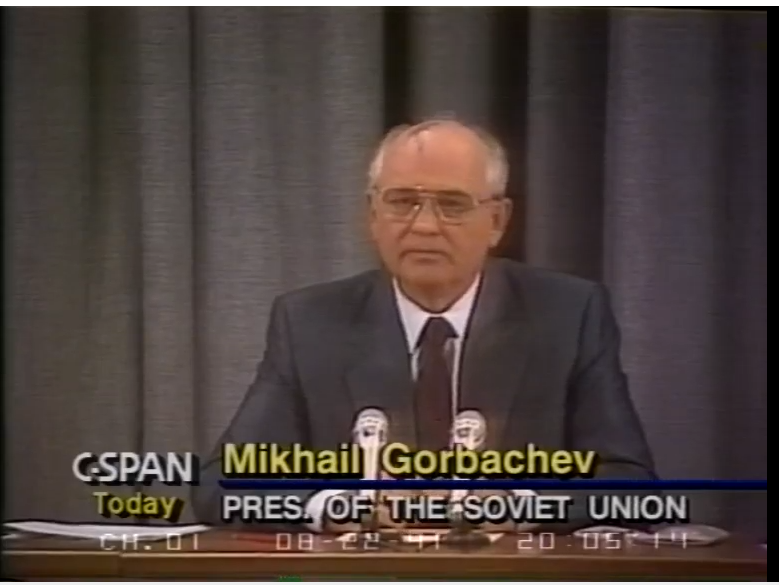

This flexibility and power are a double-edged sword when it comes to freedom and democracy. On one hand, they have given voices to millions, allowed for the free flow of information on an unprecedented scale, and enabled incredible innovation that can empower individuals and communities. On the other hand, they have led to massive, centralized platforms that now dominate much of our online experience, with near-total control over what they present to us.

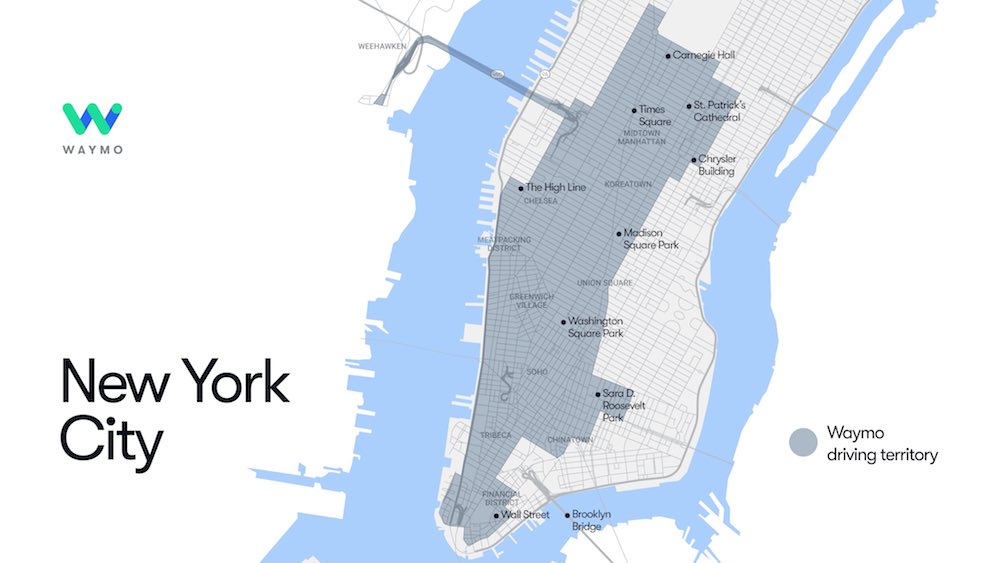

So despite this flexibility and capacity for innovation, we’re increasingly using fewer and fewer sites for more and more of our online activities. Facebook, Google, Twitter, TikTok – these giants have become the primary gateways through which many people experience the internet. This concentration gives a handful of sites outsized influence over what information we see and how we interact online.

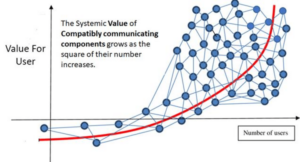

Much of this comes down to something called Metcalfe’s Law. This law states that the value of a network is proportional to the square of the number of connected users. In other words, the more people use a site, the more valuable it becomes, because of the network of people it connects you to. This creates a powerful feedback loop – people join because that’s where everyone else is, which makes the site even more attractive to new users.
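The usual intuition behind Metcalfe’s Law is a counting argument: with n users there are n(n - 1)/2 possible pairs of people who could connect, and that number grows roughly like n². The sketch below is a toy illustration that assumes value equals a hypothetical constant `k` times n²; the law is a rule of thumb, not a measured property of any real platform.

```python
def possible_connections(n: int) -> int:
    """Count the distinct user-to-user pairs in a network of n users."""
    return n * (n - 1) // 2


def metcalfe_value(n: int, k: float = 1.0) -> float:
    """Metcalfe-style value estimate, proportional to n squared.

    k is a hypothetical value-per-connection constant, not a real figure.
    """
    return k * n ** 2


if __name__ == "__main__":
    for users in (10, 100, 1000):
        print(users, "users ->", possible_connections(users), "possible connections")
    # Doubling the number of users quadruples the estimated value.
    print(metcalfe_value(2000) / metcalfe_value(1000))
```

Under this model, a rival with half as many users has only a quarter of the value, which is one way to see why the feedback loop is so hard to break once a platform is ahead.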

So as use of these centralized websites increases, their ability to control content also increases. They have used this power in questionable ways, even colluding with the government to determine what people see.

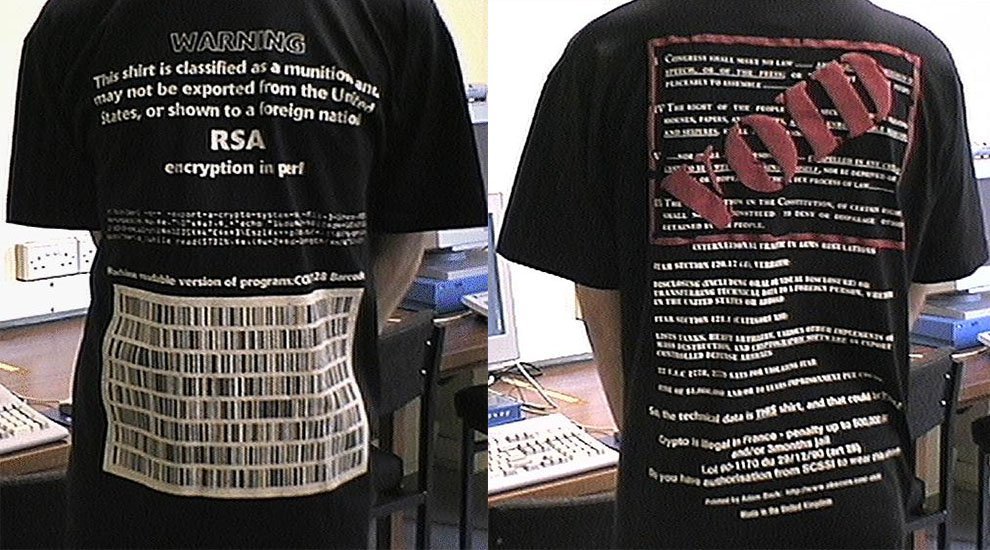

But while some have tried suing and others have tried passing laws, the real answer lies in the technology itself. While layer 7 makes it possible to censor a single site, the rest of the OSI ‘stack’ makes true censorship nearly impossible. As John Gilmore is credited with saying in the early 1990s, “The Net interprets censorship as damage and routes around it.” That ability to route around damage is significant.
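The idea of routing around damage can be shown with a toy example. The sketch below models a small, hypothetical mesh of routers as a graph and uses breadth-first search to find a path from a user to a site; marking a router as blocked (failed or censoring) simply pushes the search onto another path. Real networks rely on routing protocols such as BGP and OSPF rather than this exact search, so treat it as an illustration of redundant paths, not of how any particular router works.

```python
from collections import deque


def shortest_path(graph, start, goal, blocked=frozenset()):
    """Breadth-first search for a fewest-hops path from start to goal,
    skipping any node in `blocked` (a failed or censoring router)."""
    if start in blocked or goal in blocked:
        return None
    previous = {start: None}  # remembers how we reached each node
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk the breadcrumbs back to the start, then reverse.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in graph[node]:
            if neighbor not in previous and neighbor not in blocked:
                previous[neighbor] = node
                queue.append(neighbor)
    return None  # every route is blocked


# Hypothetical mesh of routers; each link is listed in both directions.
NETWORK = {
    "you": ["A", "B"],
    "A": ["you", "C"],
    "B": ["you", "D"],
    "C": ["A", "site"],
    "D": ["B", "site"],
    "site": ["C", "D"],
}

if __name__ == "__main__":
    print(shortest_path(NETWORK, "you", "site"))
    print(shortest_path(NETWORK, "you", "site", blocked={"C"}))
    print(shortest_path(NETWORK, "you", "site", blocked={"A", "B"}))
```

Blocking `C` still leaves the route through `B` and `D`; only when every path is cut does the search return `None`. That redundancy lives in the lower layers, independent of what any single site decides.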

Next we’ll talk about ways the internet could heal itself from its current ailments.